Understanding AI’s Environmental Impact

Artificial Intelligence (AI) has rapidly transformed numerous industries, offering innovative solutions to complex problems. However, the environmental impact of AI, particularly its carbon footprint, is becoming a significant concern. Machine learning models, especially large-scale ones, require substantial computational power, which directly translates to increased energy consumption. When that electricity is generated from fossil fuels, it produces carbon emissions that contribute to climate change. Understanding the environmental implications of AI is crucial for developing strategies that mitigate its adverse effects.

One of the primary contributors to AI’s environmental impact is the energy-intensive nature of training machine learning models. Training typically involves many iterations and repeated adjustments, consuming vast amounts of computational resources. Training a large deep learning model, for example, can consume as much energy as several households use in a year. To put this into perspective, consider the following illustrative figures:

| Model Type | Energy Consumption (kWh) | Equivalent Carbon Emissions (kg CO2) |
|---|---|---|
| Small Model | 50 | 20 |
| Medium Model | 500 | 200 |
| Large Model | 5000 | 2000 |

The table above highlights the varying energy requirements and carbon emissions associated with different model sizes. As AI continues to evolve, it is imperative to address these environmental challenges by implementing sustainable practices. These could include optimizing algorithm efficiency, leveraging renewable energy sources for data centers, and exploring alternative computing architectures. By doing so, the AI industry can significantly reduce its carbon footprint while continuing to innovate responsibly.
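A minimal sketch of the arithmetic behind tables like the one above: emissions are energy multiplied by the carbon intensity of the electricity used. The default of 0.4 kg CO2 per kWh is inferred from the table itself (20 kg / 50 kWh); it is an assumption, not a measured value for any particular grid.

```python
# Assumed grid carbon intensity, inferred from the table above (20 kg / 50 kWh).
GRID_INTENSITY_KG_PER_KWH = 0.4


def training_emissions_kg(
    energy_kwh: float, intensity_kg_per_kwh: float = GRID_INTENSITY_KG_PER_KWH
) -> float:
    """Estimate CO2 emissions (kg) of a training run from its energy use (kWh)."""
    if energy_kwh < 0 or intensity_kg_per_kwh < 0:
        raise ValueError("energy and intensity must be non-negative")
    return energy_kwh * intensity_kg_per_kwh


for name, kwh in [("Small Model", 50), ("Medium Model", 500), ("Large Model", 5000)]:
    print(f"{name}: {training_emissions_kg(kwh):.0f} kg CO2")
```

Because the relationship is linear, the same energy figure yields different emissions on a cleaner or dirtier grid; passing `intensity_kg_per_kwh=0.5` reproduces the factor implied by the second table in this article.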

The Carbon Footprint of Machine Learning

The rapid advancement of machine learning technologies has led to significant achievements across various sectors. However, this progress carries substantial environmental costs, primarily the carbon footprint of energy-intensive data processing and storage. Training complex AI models requires vast computational resources, which in turn consume large amounts of electricity. According to one widely cited 2019 study, the carbon emissions from training a single large AI model, including an extensive neural architecture search, can be comparable to the lifetime emissions of five cars.

Understanding the magnitude of machine learning’s carbon footprint is crucial for developing sustainable AI systems. The carbon emissions from these processes primarily originate from data centers, which host the necessary hardware infrastructure. These facilities often rely on non-renewable energy sources, contributing to global greenhouse gas emissions. As AI becomes more prevalent, addressing these environmental concerns becomes imperative for mitigating climate change impacts.

Efforts to reduce the carbon footprint of machine learning involve a combination of technological and strategic approaches. Green algorithms are being developed to optimize energy efficiency, reducing the computational overhead without compromising performance. Additionally, there is a growing trend towards utilizing renewable energy sources for powering data centers. Companies are investing in solar, wind, and hydroelectric power to offset their carbon emissions. By adopting these practices, the AI industry can significantly lower its environmental impact.

To visualize the carbon footprint of machine learning, consider the following data table that compares energy consumption across different AI models:

| AI Model | Energy Consumption (kWh) | CO2 Emissions (kg) |
|---|---|---|
| Small Neural Network | 50 | 25 |
| Large Neural Network | 500 | 250 |
| Advanced AI System | 5000 | 2500 |

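This table illustrates the stark differences in energy consumption and carbon emissions between various AI models, highlighting the importance of efficiency and sustainability in AI development. Both tables apply a fixed emission factor (0.4 kg CO2 per kWh in the first, 0.5 kg CO2 per kWh in this one), so emissions scale linearly with energy; in practice, the factor depends on the electricity mix of the grid powering the data center.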

Energy Consumption in Data Centers

As the backbone of modern digital infrastructure, data centers play a crucial role in the operation of machine learning systems. However, they are also significant energy consumers, accounting for approximately 1% of global electricity demand. This figure is expected to rise as the reliance on data-intensive applications grows. The energy consumption in data centers primarily stems from two sources: the computational power required for processing large volumes of data and the cooling systems necessary to maintain optimal operating temperatures.

Computational Power: The increasing complexity of machine learning models necessitates substantial computational resources. This requirement drives the demand for high-performance servers and accelerators, such as GPUs and TPUs, which consume considerable amounts of electricity. For instance, training a single large AI model can produce carbon emissions comparable to those of several cars over their lifetimes. The trend towards ever-larger models only exacerbates this issue, highlighting the need for more energy-efficient hardware and algorithms.

Cooling Systems: Effective cooling is essential for the reliable operation of data centers. In older or less efficient facilities, cooling can consume nearly as much energy as the computing equipment itself, sometimes even more, whereas efficient modern facilities keep this overhead much lower. Traditional cooling methods, such as conventional air conditioning, are energy-intensive and contribute significantly to the overall carbon footprint. Innovative solutions, including liquid cooling and free-air cooling, are being explored to reduce energy consumption and improve efficiency.
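Cooling overhead is commonly summarized with Power Usage Effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment. A facility whose cooling and other overhead match its IT load has a PUE of about 2.0. The sketch below assumes a hypothetical 1,000 kWh IT load; the figures are illustrative only.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Non-IT energy (mostly cooling and power distribution) implied by a PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


it_load = 1000.0  # hypothetical kWh used by servers, storage, and networking

# Facility where overhead roughly equals the IT load.
print(f"PUE: {pue(2000.0, it_load):.2f}")
# A more efficient design with PUE 1.2 spends far less on overhead.
print(f"overhead at PUE 1.2: {overhead_kwh(it_load, 1.2):.0f} kWh")
```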

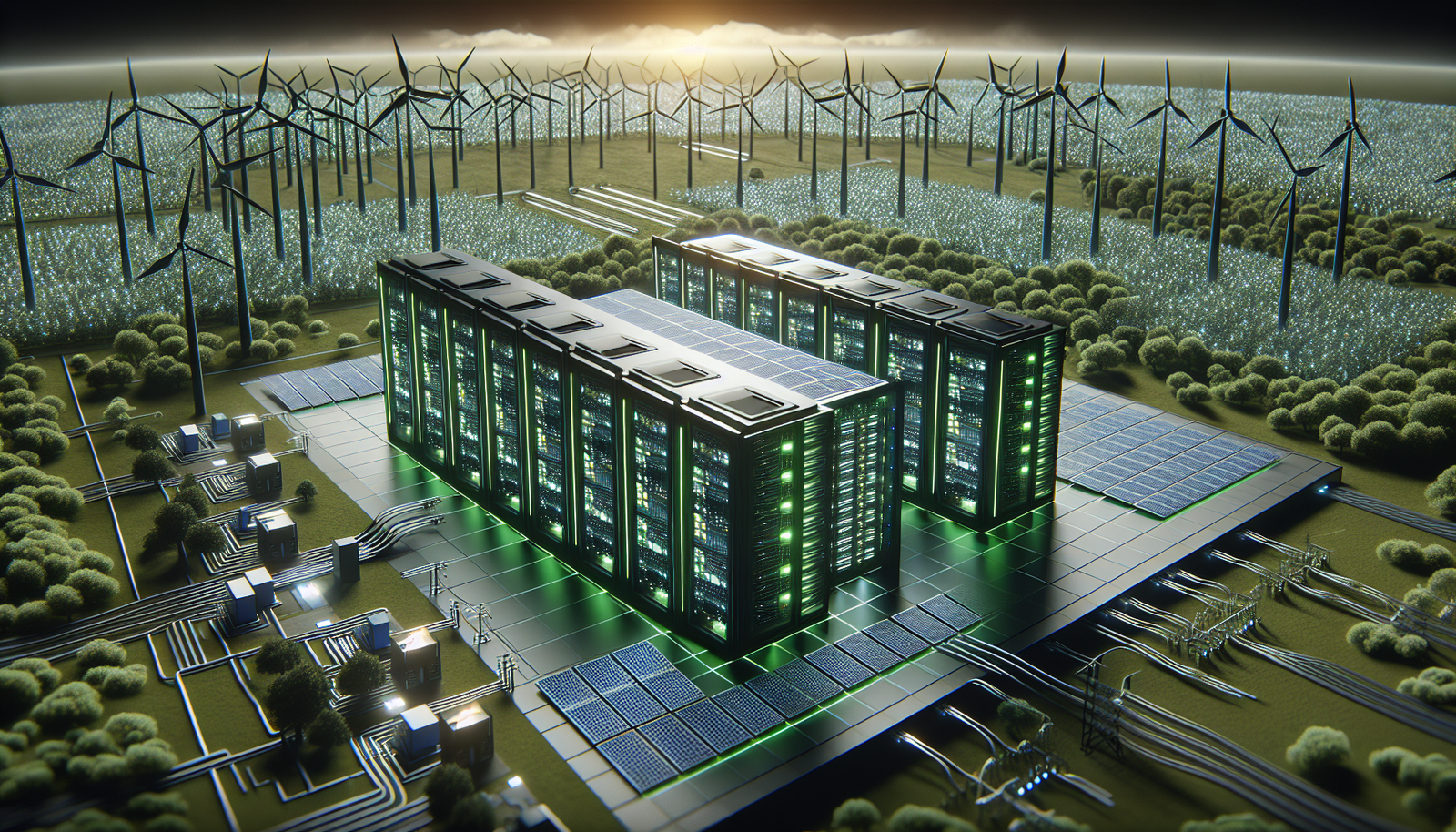

Recent advancements in green technologies offer promising pathways to mitigate the energy consumption of data centers. Transitioning to renewable energy sources, such as solar and wind, is one viable strategy. Several technology giants have made commitments to power their data centers with 100% renewable energy, setting a precedent for others in the industry. Moreover, the development of energy-efficient algorithms and hardware optimizations can further contribute to reducing the carbon footprint of machine learning operations.

Green Algorithms: Designing Eco-Friendly Solutions

Green algorithms are at the forefront of efforts to reduce the environmental impact of machine learning models. These algorithms are designed with energy efficiency in mind, aiming to minimize the computational resources required to perform complex tasks. By optimizing algorithmic processes, developers can significantly lower power consumption without compromising performance. One approach involves reducing the number of operations within the algorithm, thereby cutting down the energy usage during each computation.
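One concrete way to reduce the number of operations is to replace a dense weight matrix with a low-rank factorization. This is a swapped-in example, not a technique the text prescribes. The sketch only counts multiply-accumulate operations (MACs); the per-MAC energy is a hypothetical placeholder, and a factorization approximates the original matrix, so accuracy must be checked separately.

```python
# Hypothetical energy cost per multiply-accumulate; real costs depend on
# hardware, numeric precision, and memory traffic (which often dominates).
ENERGY_PER_MAC_J = 1e-12  # 1 picojoule, assumed for illustration only


def dense_macs(in_features: int, out_features: int) -> int:
    """MACs for one forward pass of a dense layer y = W x, W shaped (out, in)."""
    return in_features * out_features


def low_rank_macs(in_features: int, out_features: int, rank: int) -> int:
    """MACs when W is replaced by U (out x rank) times V (rank x in)."""
    return rank * (in_features + out_features)


dense = dense_macs(4096, 4096)
factored = low_rank_macs(4096, 4096, rank=256)
print(f"dense: {dense:,} MACs, factored: {factored:,} MACs")
print(f"operation reduction: {1 - factored / dense:.1%}")
print(
    f"estimated energy per pass: {dense * ENERGY_PER_MAC_J:.2e} J "
    f"vs {factored * ENERGY_PER_MAC_J:.2e} J"
)
```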

Another strategy for creating green algorithms is model compression: reducing the size of machine learning models while maintaining their accuracy. Smaller models require fewer computations and less memory traffic, which lowers energy consumption. Techniques such as pruning, quantization, and knowledge distillation are commonly employed to achieve this goal. For instance, pruning removes unnecessary neurons or connections in a neural network. Because it is usually applied to an already-trained model, its main benefit is lower energy use during inference, and the speedup is largest when whole neurons or channels are removed, since hardware cannot always exploit scattered zero weights.
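A minimal sketch of unstructured magnitude pruning in NumPy, one common form of the pruning described above: the smallest-magnitude fraction of weights is set to zero. The random weight matrix and the 50% sparsity target are assumptions for illustration. In practice, pruning is usually followed by fine-tuning, and energy savings depend on a runtime that exploits the zeros.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> tuple[np.ndarray, np.ndarray]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Returns the pruned weights and a boolean mask of the weights that were kept.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # number of weights to remove
    if k == 0:
        mask = np.ones(weights.shape, dtype=bool)
    else:
        # k-th smallest absolute value over the flattened array; ties may prune a bit more.
        threshold = np.partition(np.abs(weights), k - 1, axis=None)[k - 1]
        mask = np.abs(weights) > threshold
    return weights * mask, mask


rng = np.random.default_rng(0)
w = rng.normal(size=(256, 256))  # stand-in for a trained layer's weights
pruned, mask = magnitude_prune(w, sparsity=0.5)
print(f"kept {mask.mean():.1%} of weights")
```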

In addition to algorithmic improvements, the use of renewable energy sources in data centers is crucial for sustainable AI. Data centers can significantly reduce their carbon footprint by incorporating solar, wind, or hydroelectric power. This transition not only supports the environment but can also lead to cost savings in the long term. Moreover, the development of algorithms that can dynamically adjust their energy consumption based on the availability of renewable energy is an emerging trend. These adaptive algorithms can schedule intensive tasks during periods of high renewable energy availability, optimizing both performance and sustainability.

- Reduce number of operations: Minimize energy usage

- Model compression: Pruning, quantization, knowledge distillation

- Renewable energy: Solar, wind, hydroelectric

- Adaptive algorithms: Dynamic energy consumption adjustment

| Technique | Benefit |
|---|---|
| Model Compression | Reduces data processing and energy consumption |
| Renewable Energy | Lowers carbon footprint and operational costs |
| Adaptive Algorithms | Optimizes energy use based on availability |
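The adaptive scheduling idea described in this section can be sketched as choosing the start time whose window has the lowest forecast carbon intensity. The hourly forecast below is hypothetical; a real scheduler would obtain forecasts from a grid operator or a carbon-intensity data service and would also weigh deadlines and capacity.

```python
from typing import Sequence


def greenest_start_hour(forecast_g_per_kwh: Sequence[float], job_hours: int) -> int:
    """Return the start index of the contiguous window with the lowest total carbon intensity."""
    n = len(forecast_g_per_kwh)
    if job_hours <= 0 or job_hours > n:
        raise ValueError("job_hours must be between 1 and the forecast length")
    window = sum(forecast_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window
    for start in range(1, n - job_hours + 1):
        # Slide the window by one hour: add the newest hour, drop the oldest.
        window += forecast_g_per_kwh[start + job_hours - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


# Hypothetical hourly forecast (g CO2/kWh); intensity dips midday as solar output rises.
forecast = [450, 430, 400, 320, 210, 180, 190, 260, 380, 420]
print("start a 3-hour job at hour", greenest_start_hour(forecast, job_hours=3))
```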

Efficient Data Storage and Management

Efficient data storage and management are crucial components in reducing the carbon footprint of machine learning. The exponential growth in data volume demands innovative solutions to minimize energy consumption associated with data centers. One approach to achieve this is through the implementation of advanced data compression techniques. By reducing the size of the data, not only is the storage requirement minimized, but the energy needed for data processing and transmission is also decreased.
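A minimal sketch of measuring how much lossless compression shrinks a dataset, using Python's standard `zlib` module as one example codec. The log-like records are hypothetical. Compression trades CPU time for reduced storage and transfer, so the net energy benefit depends on how often the data is read back.

```python
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return original size / zlib-compressed size (higher means more space saved)."""
    if not data:
        raise ValueError("data must be non-empty")
    compressed = zlib.compress(data, level)
    # Lossless round trip: decompression must return the original bytes exactly.
    if zlib.decompress(compressed) != data:
        raise RuntimeError("round-trip check failed")
    return len(data) / len(compressed)


# Hypothetical repetitive, log-like records, which typically compress very well.
log_data = b"".join(
    b"2024-01-01T00:00:%02d INFO request served in 12ms\n" % (i % 60)
    for i in range(10_000)
)
print(f"{len(log_data):,} bytes, compression ratio {compression_ratio(log_data):.1f}x")
```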

Data redundancy is another significant issue that can be addressed to enhance sustainability. Redundant data storage leads to unnecessary energy expenditures. Implementing deduplication strategies helps in identifying and eliminating duplicate copies of data, thereby optimizing storage utilization. Furthermore, adopting cloud-based solutions that capitalize on shared resources can improve both energy efficiency and data accessibility, aligning with sustainable AI practices.
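Deduplication is often implemented by content hashing: each chunk is stored once under its digest, and a list of digests records how to rebuild the original sequence. The sketch below assumes the data is already split into chunks (production systems often use content-defined chunking), and the sample chunks are hypothetical.

```python
import hashlib
from typing import Iterable


def deduplicate(chunks: Iterable[bytes]) -> tuple[dict[str, bytes], list[str]]:
    """Store each distinct chunk once, keyed by its SHA-256 digest.

    Returns the content store (digest -> chunk) and the list of digests
    needed to reconstruct the original sequence of chunks.
    """
    store: dict[str, bytes] = {}
    recipe: list[str] = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first copy of each chunk
        recipe.append(digest)
    return store, recipe


def restore(store: dict[str, bytes], recipe: list[str]) -> bytes:
    """Rebuild the original byte stream from the store and the digest list."""
    return b"".join(store[d] for d in recipe)


files = [b"model-v1 weights", b"training config", b"model-v1 weights", b"training config", b"eval report"]
store, recipe = deduplicate(files)
print(f"{len(files)} chunks stored as {len(store)} unique chunks")
```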

Additionally, the choice of data storage hardware plays a vital role in sustainable data management. Transitioning to energy-efficient storage devices, such as solid-state drives (SSDs) over traditional hard disk drives (HDDs), can significantly reduce energy consumption. The following table provides a comparison of energy usage between SSDs and HDDs:

| Storage Type | Average Power Consumption (Watts) |
|---|---|
| Solid-State Drive (SSD) | 2-3 |
| Hard Disk Drive (HDD) | 6-7 |
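To translate the power figures in the table into yearly energy, multiply average power by the hours in a year. The sketch uses the midpoint of each range and assumes continuous operation. It compares drives one-to-one; a per-terabyte comparison can come out differently, because drive capacities differ.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(average_watts: float) -> float:
    """Energy (kWh) for a device drawing `average_watts` continuously for a year."""
    return average_watts * HOURS_PER_YEAR / 1000


ssd = annual_kwh(2.5)  # midpoint of the 2-3 W SSD range
hdd = annual_kwh(6.5)  # midpoint of the 6-7 W HDD range
print(f"SSD: {ssd:.1f} kWh/yr, HDD: {hdd:.1f} kWh/yr, saving per drive: {hdd - ssd:.1f} kWh/yr")
```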

Moreover, leveraging AI-driven data management systems can further enhance the efficiency of data storage processes. These systems utilize machine learning algorithms to predict storage needs, optimize data retrieval paths, and automate data lifecycle management. Integrating these intelligent systems not only streamlines operations but also contributes to a significant reduction in the environmental impact of data storage and management.
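Automated lifecycle management ultimately decides where each dataset should live. The sketch below is a rule-based stand-in: it assigns a storage tier from the time since last access, using hypothetical thresholds. An AI-driven system, as described above, would replace the fixed thresholds with a model's predicted likelihood of future access.

```python
from datetime import date

# Hypothetical thresholds; real policies depend on retrieval latency needs,
# access costs, and retention rules.
HOT_MAX_DAYS = 30
WARM_MAX_DAYS = 180


def storage_tier(last_access: date, today: date) -> str:
    """Map the time since a dataset was last accessed to a storage tier."""
    age_days = (today - last_access).days
    if age_days <= HOT_MAX_DAYS:
        return "hot"  # fast, higher-power storage
    if age_days <= WARM_MAX_DAYS:
        return "warm"
    return "archive"  # dense, low-power storage such as tape or cold object storage


today = date(2024, 6, 1)
datasets = {
    "clickstream-current": date(2024, 5, 28),
    "q1-features": date(2024, 2, 10),
    "2021-raw-logs": date(2022, 1, 5),
}
for name, last in datasets.items():
    print(name, "->", storage_tier(last, today))
```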

Renewable Energy for AI Operations

Renewable energy plays a crucial role in reducing the carbon footprint of AI operations. As machine learning models require substantial computational power, the energy consumption of the data centers hosting these models is significant. Transitioning to renewable energy sources such as solar, wind, and hydroelectric power can substantially decrease the environmental impact of these centers. Over recent years, tech companies have made strides in integrating renewable energy into their operations. For instance, Google and Microsoft have committed to powering their data centers with 100% renewable energy, setting a precedent for other companies to follow.

The integration of renewable energy is not without its challenges. Intermittency is a key issue: solar and wind output varies with the weather and the time of day. To address this, companies are investing in energy storage solutions that ensure a consistent power supply. Additionally, optimizing energy usage through efficient cooling systems and energy management practices is essential. The following table compares typical lifecycle carbon emissions per unit of electricity for traditional and renewable sources, illustrating the potential reduction from switching:

| Energy Source | Lifecycle Carbon Emissions (kg CO2-eq/MWh) |
|---|---|
| Coal | 820 |
| Natural Gas | 490 |
| Solar | 48 |
| Wind | 11 |
| Hydroelectric | 24 |
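Applying these factors to a concrete workload shows the scale of the difference. The sketch reuses the 5,000 kWh "Large Model" run from the first table and assumes, for simplicity, that all of its electricity comes from a single source. Real data centers draw a mix of sources.

```python
# Lifecycle emission factors from the table above, in kg CO2-eq per MWh.
EMISSION_FACTORS_KG_PER_MWH = {
    "Coal": 820,
    "Natural Gas": 490,
    "Solar": 48,
    "Hydroelectric": 24,
    "Wind": 11,
}


def emissions_kg(energy_kwh: float, source: str) -> float:
    """Emissions (kg CO2-eq) for `energy_kwh` of electricity supplied entirely by `source`."""
    return energy_kwh / 1000 * EMISSION_FACTORS_KG_PER_MWH[source]


run_kwh = 5000  # the "Large Model" training run from the first table
for source in EMISSION_FACTORS_KG_PER_MWH:
    print(f"{source:>13}: {emissions_kg(run_kwh, source):7.1f} kg CO2-eq")
```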

Adopting renewable energy is just one part of the strategy for sustainable AI. It is complemented by innovations in green algorithms, which aim to make machine learning models more energy-efficient. By designing algorithms that require less computational power, the reliance on energy-intensive processes can be minimized. Furthermore, the use of energy-efficient hardware and the development of more efficient data processing techniques are pivotal in reducing overall energy consumption. Together, these efforts contribute to a more sustainable approach to AI operations, aligning with global efforts to combat climate change.

Optimizing AI Models for Sustainability

The optimization of AI models for sustainability is a critical step in reducing the carbon footprint associated with machine learning. Energy-efficient algorithms play a vital role in this process, as they are designed to perform complex tasks while minimizing energy consumption. By leveraging advanced techniques, such as model pruning and quantization, developers can significantly decrease the computational resources required, thus reducing energy use and associated emissions.

To further illustrate, consider the comparative benefits of using different optimization techniques:

- Model Pruning: This technique removes redundant neurons or weights, producing a smaller and often faster model with little or no loss in accuracy when applied carefully.

- Quantization: This technique reduces the numerical precision of weights and activations (for example, from 32-bit floating point to 8-bit integers), which can lead to significant energy savings, especially in large-scale deployments.
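The quantization bullet above can be sketched with symmetric per-tensor int8 quantization in NumPy. This is one simple scheme chosen for illustration; production toolchains often use per-channel scales and calibration data. The sketch shows the 4x storage reduction and that the rounding error stays within half a quantization step. Actual energy savings depend on hardware with efficient int8 arithmetic.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization of float weights to int8 with one scale."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 values back to approximate float32 weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(scale=0.05, size=(512, 512)).astype(np.float32)  # stand-in weights
q, scale = quantize_int8(w)
err = float(np.max(np.abs(dequantize(q, scale) - w)))
print(f"storage: {w.nbytes} -> {q.nbytes} bytes; max abs error {err:.5f} (half step {scale / 2:.5f})")
```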

Integrating these optimization methods can contribute to substantial energy savings. For example, a recent study showed that using model pruning could reduce energy consumption by up to 40% in certain deep learning models. Similarly, quantization can lead to a 20-30% reduction in energy use, depending on the model and application.

To better understand the potential impact, observe the following table, which summarizes the energy savings achieved through these optimization techniques:

| Optimization Technique | Energy Savings (%) |
|---|---|
| Model Pruning | Up to 40 |
| Quantization | 20-30 |

By embracing these optimization strategies, organizations can not only enhance the efficiency of their AI models but also contribute to broader environmental sustainability goals. Continued research and development in this area are essential to further reduce the carbon footprint of AI technologies, ensuring that the benefits of machine learning are realized without compromising ecological integrity.

AI in Environmental Conservation

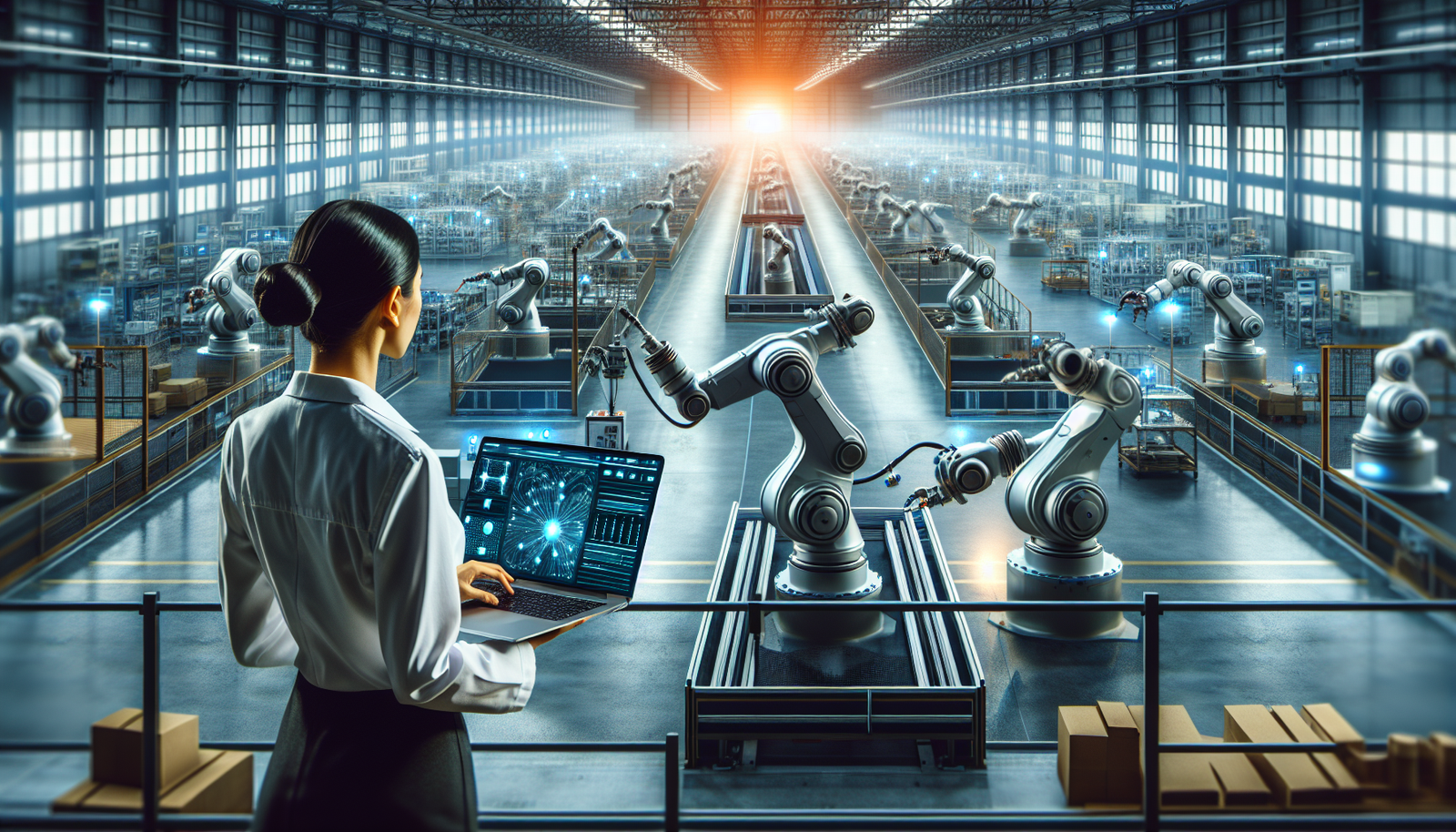

Artificial Intelligence (AI) has emerged as a powerful tool in environmental conservation, providing innovative solutions to some of the most pressing ecological challenges. By leveraging AI technologies, conservationists can monitor biodiversity, track wildlife populations, and manage natural resources more effectively. One of the primary applications of AI in this field is the analysis of satellite imagery, which enables the detection of deforestation patterns, illegal logging, and habitat destruction in near real time.

Moreover, AI-driven systems are being utilized to combat poaching and protect endangered species. Through the use of predictive analytics, these systems can anticipate poaching activities by analyzing historical data and environmental factors. This allows for the strategic deployment of resources, enhancing the efficiency of conservation efforts. Additionally, AI-powered drones equipped with cameras and sensors are used for monitoring vast and remote areas, providing crucial data that was previously difficult to obtain.

Another significant contribution of AI to environmental conservation is its ability to optimize energy consumption and reduce waste. AI algorithms can analyze energy usage patterns and suggest improvements, leading to more sustainable practices. For instance, AI can be applied in smart grid systems to balance energy loads and incorporate renewable energy sources more effectively. This not only reduces the carbon footprint of energy consumption but also promotes a more sustainable approach to resource management.

In summary, AI is playing a transformative role in environmental conservation by providing advanced tools and techniques for data analysis, resource management, and sustainable practices. As AI technology continues to evolve, its potential to contribute to ecological preservation and sustainability is likely to expand, offering new opportunities to address the complex challenges facing our planet.

Corporate Responsibility and AI Sustainability

The rapid advancement of artificial intelligence technologies presents a double-edged sword: while AI has the potential to drive innovation, its environmental impact poses significant challenges. Companies leveraging AI must recognize their role in mitigating its carbon footprint. Corporate responsibility in AI sustainability involves implementing strategies that reduce energy consumption and promote eco-friendly practices. By integrating sustainable AI principles into their operations, businesses can not only enhance their environmental credibility but also contribute to global sustainability efforts.

One of the primary ways companies can address AI’s environmental impact is by adopting green algorithms. These are designed to perform tasks more efficiently, thereby reducing the energy required for computation. Many organizations have begun investing in research and development to optimize these algorithms, making them less resource-intensive. Furthermore, companies are encouraged to utilize renewable energy sources to power their data centers. By transitioning to solar, wind, or hydroelectric power, businesses can significantly cut down the carbon emissions associated with machine learning processes.

Moreover, corporate responsibility extends to transparency in reporting energy consumption and carbon emissions. Companies can set an example by publicly sharing their sustainability goals and progress. This not only fosters trust among stakeholders but also encourages other organizations to follow suit. A growing trend is the formation of industry coalitions focused on AI sustainability, where members commit to shared environmental objectives and collaborate on innovative solutions.

To illustrate how the impact of these efforts might be reported, consider the following example table of carbon emission reductions at companies that have adopted sustainable AI practices (the company names are placeholders):

| Company | Year of Adoption | Reduction in Carbon Emissions (%) |
|---|---|---|
| Company A | 2020 | 30% |
| Company B | 2021 | 25% |
| Company C | 2019 | 40% |

The Role of Policy in Sustainable AI

Policy initiatives play a crucial role in steering the development and implementation of sustainable AI technologies. Governments and organizations must establish frameworks that encourage the reduction of carbon emissions associated with machine learning processes. These policies can include incentives for companies that adopt energy-efficient practices or penalties for those that fail to meet sustainability standards.

One of the most effective ways to promote sustainable AI is through regulatory policies that mandate the use of renewable energy sources in data centers. By enforcing such regulations, governments can ensure that the vast amounts of energy consumed by AI operations are sourced from clean, sustainable energy. Additionally, setting benchmarks for energy efficiency in AI development can drive innovation in creating more energy-efficient algorithms.

Furthermore, international cooperation and policy harmonization are vital in addressing the global challenge of reducing AI’s carbon footprint. Countries can collaborate to establish common standards and share best practices for sustainable AI development. This approach not only fosters a unified effort towards environmental sustainability but also helps in managing the cross-border impact of AI technologies.

Data visualizations can make the impact of policy on AI sustainability easier to understand. For instance, a table summarizing the carbon emission reductions achieved by countries implementing sustainable AI policies can provide a clear overview of progress. Below is an example of how such data might be presented:

| Country | Policy Implemented | Carbon Emission Reduction (%) |
|---|---|---|
| Country A | Renewable Energy Mandate | 25% |
| Country B | Energy Efficiency Standards | 30% |

Future Trends in Sustainable AI

The field of sustainable AI is rapidly evolving, with several emerging trends poised to significantly reduce the carbon footprint of machine learning technologies. One of the most promising trends is the development of energy-efficient algorithms. These algorithms are designed to perform computations with drastically reduced energy consumption, thereby minimizing their environmental impact. For instance, recent advancements have shown that adopting quantization and pruning techniques can cut down energy usage by up to 40% while maintaining high model accuracy.

Another key trend is the shift towards the use of renewable energy sources in powering data centers. Companies and research institutions are increasingly investing in solar, wind, and hydroelectric power to run their AI operations. This transition not only helps in reducing the reliance on fossil fuels but also in achieving long-term cost savings. Moreover, partnerships between tech companies and green energy suppliers are becoming more prevalent, as illustrated in the table below:

| Company | Renewable Energy Partner | Energy Source |
|---|---|---|
| Company A | Green Energy Co. | Solar |
| Company B | Eco Power | Wind |
| Company C | Hydro Partners | Hydroelectric |

Moreover, the concept of AI for sustainability is gaining traction, where AI technologies are leveraged to promote environmental conservation efforts. This includes using machine learning models to optimize energy grids, predict and mitigate the effects of climate change, and enhance resource management. The integration of AI in these areas not only helps in making AI more sustainable but also contributes positively to broader environmental goals.

In conclusion, the future of sustainable AI is centered around both reducing the environmental impact of AI technologies and using AI to foster environmental sustainability. As these trends continue to develop, they offer a pathway to a more sustainable future where technology and environmental stewardship coexist harmoniously.

Case Studies: Success Stories in Sustainable AI

In recent years, several organizations and research institutions have made significant strides in reducing the carbon footprint of machine learning applications. These case studies highlight innovative approaches and successful implementations of sustainable AI strategies.

One notable example is the collaboration between a leading technology company and an environmental NGO, which resulted in the development of an energy-efficient AI model for data centers. By optimizing the cooling systems and utilizing advanced machine learning algorithms, the data centers achieved a 20% reduction in energy consumption. This initiative not only lowered operational costs but also significantly decreased the carbon emissions associated with data processing.

Another success story comes from a prominent academic institution that has pioneered the use of green algorithms in AI research. By focusing on algorithmic efficiency and minimizing computational waste, the institution managed to cut down the energy usage of their AI workloads by nearly 30%. This breakthrough was achieved through the implementation of a three-step approach:

- Identifying energy-intensive processes within algorithms

- Redesigning these processes to enhance efficiency

- Incorporating renewable energy sources to power computations
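The first step, identifying energy-intensive processes, can start with simple instrumentation. The sketch below times two hypothetical pipeline stages and converts wall-clock time to energy using an assumed constant power draw of 300 W, a placeholder value. This is only a rough proxy; real measurements would come from hardware power counters, such as Intel RAPL or NVIDIA's `nvidia-smi`.

```python
import time
from contextlib import contextmanager

ASSUMED_AVG_POWER_W = 300.0  # hypothetical average draw of the machine running the workload


@contextmanager
def measure(stage: str, results: dict[str, float]):
    """Record the wall-clock seconds spent inside the `with` block under `stage`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[stage] = time.perf_counter() - start


def estimated_kwh(seconds: float, watts: float = ASSUMED_AVG_POWER_W) -> float:
    """Convert runtime at a constant power draw to kWh (joules / 3.6 million)."""
    return watts * seconds / 3_600_000


results: dict[str, float] = {}
with measure("preprocess", results):  # hypothetical stage
    data = [i * 0.5 for i in range(200_000)]
with measure("train_step", results):  # hypothetical stage
    total = sum(x * x for x in data)

# Rank stages by estimated energy so the most energy-intensive ones are redesigned first.
for stage, secs in sorted(results.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage}: {secs:.3f} s, ~{estimated_kwh(secs):.2e} kWh")
```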

Furthermore, a leading AI-driven transportation company has set an example by integrating sustainable practices across its operations. By transitioning to renewable energy sources and employing AI to optimize route planning, the company has successfully reduced its carbon footprint. The following table summarizes the impact of these initiatives:

| Sustainable Practice | Impact on Carbon Footprint |
|---|---|
| Renewable Energy Adoption | 25% reduction |
| AI-driven Route Optimization | 15% reduction |

These case studies demonstrate the potential of sustainable AI practices in mitigating the environmental impact of machine learning. By learning from these success stories, other organizations can adopt similar strategies to contribute towards a more sustainable future.

Challenges and Solutions in Reducing AI’s Carbon Footprint

Challenges in Reducing AI’s Carbon Footprint

The carbon footprint of machine learning models is a growing concern due to the substantial energy consumption required for training and deploying these systems. One of the primary challenges is the energy-intensive nature of training large-scale AI models, which often necessitate massive computational resources. This demand results in significant greenhouse gas emissions, especially when fossil fuels power the data centers. Additionally, the lack of transparency in reporting the energy usage and emissions of AI processes complicates efforts to assess and mitigate their environmental impact.

- Energy-Intensive Training Processes

- Lack of Transparency in Emissions Reporting

- Reliance on Non-Renewable Energy Sources

Solutions for a Sustainable AI

Several solutions have emerged to address these challenges and promote sustainable AI practices. A prominent strategy is the development of green algorithms designed to optimize computational efficiency and reduce energy usage. These algorithms aim to minimize the number of operations required for training without compromising performance. Moreover, the adoption of renewable energy sources in powering data centers can significantly reduce the carbon footprint of AI. Companies are increasingly investing in solar, wind, and other sustainable energy sources to power their operations.

- Development of Green Algorithms

- Adoption of Renewable Energy Sources

- Enhanced Transparency and Reporting Standards

| Challenge | Solution |
|---|---|
| Energy-Intensive Training | Green Algorithms |
| Lack of Transparency | Enhanced Reporting Standards |
| Reliance on Fossil Fuels | Renewable Energy Adoption |